VISION STATEMENT

Let's talk about what's right in front of our faces. Right now, what's going on right in front of your face? There are things that you're seeing (this screen, me, your phone, etc.), but let's also talk about the invisible world a bit.

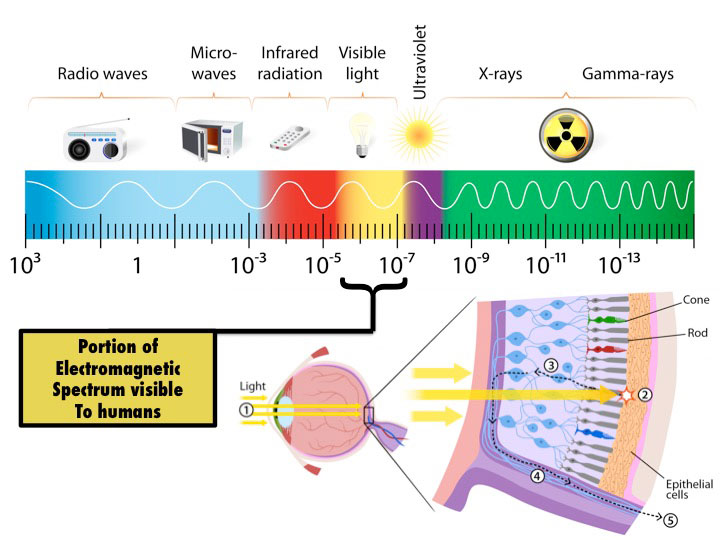

fig.1 - Electromagnetic spectrum.

Whatever you're seeing, you're looking at light and colors. But you're not seeing all the colors that are there. There's an entire electromagnetic spectrum, and we can only see a little bit of it. And even in that little bit, there are things we can't see. This is because of the rods and cones in our eyes.

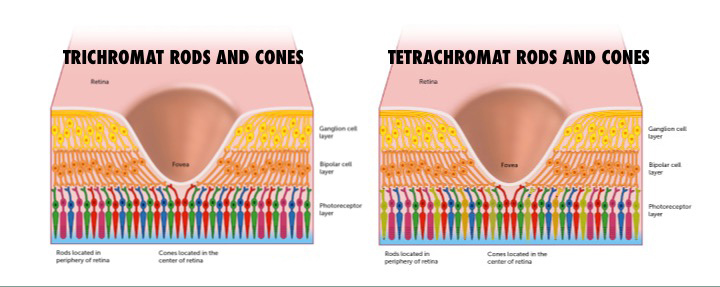

fig.2a - Tetrachromatism.

Most humans have three types of color-receptive cone in their eyes. An estimated one percent of people, mostly women, are tetrachromats: they have a specific mutation that gives them a fourth type of cone, allowing them to see colors the rest of us can't.

fig.2b - Dot test.

Tetrachromats can differentiate between subtle hues to see numbers in tests like the one above, and can unfocus their eyes while looking at something like the test below to see colors like yellowblue and reddish green that are present all around us, but unseen.

fig.2c - Yellowblue test.

Dogs only have two types of cone, meaning they can't distinguish between green, yellow, and red based on color.

fig.3a - Dog retinas.

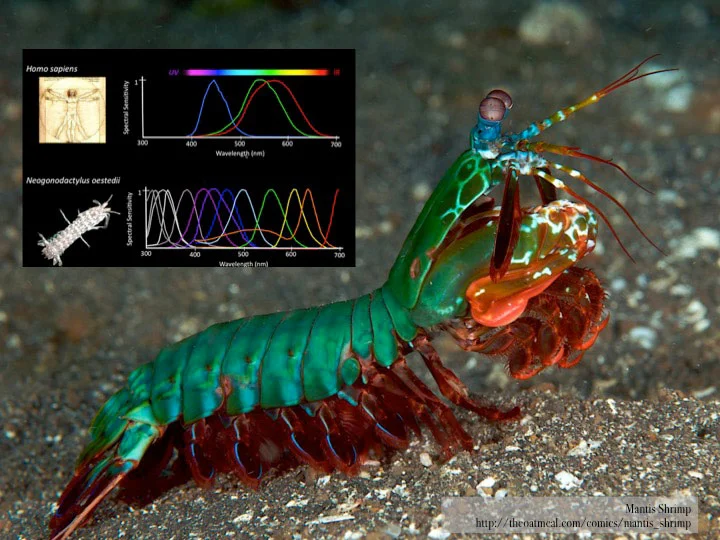

The mantis shrimp has up to 16 types of photoreceptor in its eyes and can detect wavelengths, including ultraviolet, that we cannot. These colors are there even if we don't see them.

So there are colors you can't see in front of you, but there are also things outside the visible spectrum.

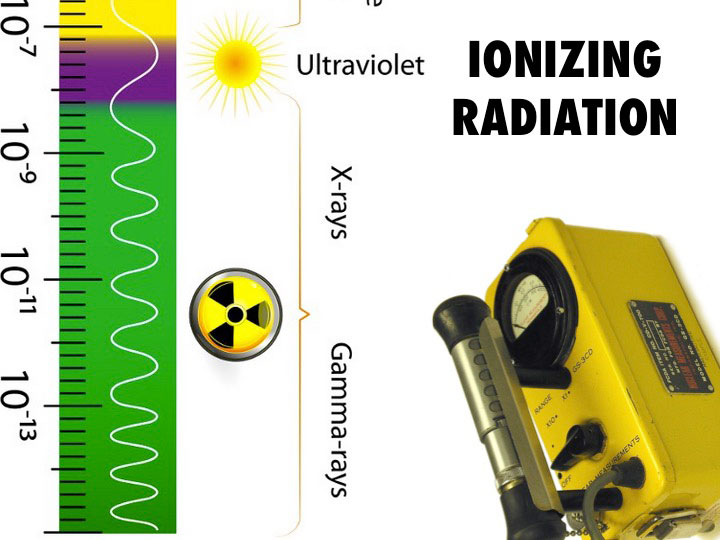

Real quick: on one side of the visible spectrum there's ultraviolet light, and beyond it ionizing radiation like X-rays and gamma rays (which can be deadly; you'd need a Geiger counter to pick them up). On the other side there's infrared light and non-ionizing radiation like microwaves and radio waves. That includes the VHF and UHF bands (which you could pick up on TVs with rabbit ears), and from UHF up into the super high frequencies, the bands that carry Wi-Fi and cell phone signals.

An app released in 2015 lets you see the radio waves around you based on location. You can't see these waves filling this room right now, but they're still there. So we've established there are colors we can't see and there are parts of the spectrum we can't see. What else? Well, another thing you're probably not seeing is a Pokémon.

fig.6a - Sosin lads with Pokémon.

I have two boys, and they are crazy for Pokémon. We started playing Pokémon GO together when it came out last July and we haven't stopped. That's not true of everyone, of course: four out of five Pokémon GO users have quit playing the game. But before you dismiss it, remember that the game had A LOT of users at its peak.

fig.6b - Pokémon GO Daily Unique Visitors through Dec 2016.

Do you remember those first few weeks? When you'd see roaming packs of teens on the streets, and everyone seemed to be on their phones looking at a Pokémon GO screen? It was a very visible trend: the connection to real-world locations ensured people were out and about and being seen.

fig.7 - Casey Neistat's Vlog, July 13 2016.

Here's why it's notable: my kids experienced that at an early enough age that they're now conditioned to expect that level of mixed reality. We talk about kids who are "digital natives" and expect the conveniences older people are still getting used to, but the younger end of Generation Z and the kids coming after them are a step beyond that. They're Mixed Reality natives.

fig.8 - Oculus Rift.

They're going to be imagining things in those invisible spaces, expecting there to be another dimension of reality only visible through digital means.

fig.9 - Jean Baudrillard.

French philosopher Jean Baudrillard described a world like this in 1981, in his work Simulacra and Simulation. Baudrillard asked us to envision a map of an area, but then imagine that map being enlarged to the point that it was bigger than the area being mapped. Which is more real, the map or the smaller area it is mapping? Baudrillard saw that map as the hyperreal, a place where symbols are untethered from what they symbolize, an abstract space that feels more real than the reality we live in.

This is the difference between reality and reality shows. We give things that are famous more status in our lives because they represent something in our shared cultural experience. We feel like we know these celebrities and we care about them in ways we don't care about the real people around us, because they "matter". That's why the most desired career for young people right now is "YouTuber". Achieving that fame is an ideal that promises you too can matter and become more real.

fig.11 - Pokéradar.

Much like that electromagnetic spectrum, the hyperreal is surrounding us and we can't see it. But my kids can. They're like those four-coned tetrachromats who can see colors the rest of us can't. Augmented Reality gives the hyperreal a substance, an actual plane to exist on. That's how you get a map bigger than the area it is mapping: by expanding dimensionally. They're going through this world knowing there are other layers that can go on top of it. And they're expecting that. We need to start expecting that too, if we're going to start visualizing where this goes.

It's going to hugely affect our self-image. There's going to be our "meatspace" self, and then there are going to be our avatars. Which one is more real? I might look like this in the real world, but what if I never felt like my gender matched who I really was? What if my genetics got in the way of me showing who I am? I didn't pick this face or this body, but an avatar gives you the option to control and update your appearance at will. Millennials and Gen Z kids are built to have multiple identities, and soon those identities will become more real than their real identities.

fig.13 - Boston Dynamics Robotics.

It's also going to open whole new possibilities. Robotics right now is a clunky mess: we've seen those Boston Dynamics nightmare robots that look like headless horses, and engineers frantically trying to program robots that can get out of a car right.

You’ve probably seen things about how in the near future people are going to be falling in love with robots, essentially “lifelike” dolls that aren’t quite lifelike enough. But I think that’s a failure of imagination. I think the first robots that inspire love are going to be AI avatars that work with our avatars. The way people are going to cross the “uncanny valley” is by meeting halfway.

We don't need to be tethered to an idea of robots in the real world. Let's talk about virtual robots.

fig.15 - Virtual robots and butlers.

Maybe our physical selves won't have robot butlers, but our avatars will. What utilities would they perform for you? Well, there are three things that AIs and butlers do well: personalized MANAGEMENT (running your schedule, setting things up for you, keeping things tidy), ANALYSIS (crunching data, giving advice), and DISCOVERY (bringing you the paper, keeping you informed). Their functionality could bridge both the real world and the virtual world.

fig.16 - The Roomba and virtual assistance.

You might turn to your virtual robot to keep your house in order, sending out fleets of Roombas to clean. Maybe they'll handle your schedule or passwords. Maybe they'll procure things for you from the virtual world, or "grind" for you in a game while you do work elsewhere. Whatever they do, they will be serving a utility and making our lives easier. And if they're going to be successful they will have a personality - a little formal, but with enough warmth to make interaction with them engaging.

fig.17 - AI Conversation Design metrics.

This is critical to understand: for AI to take off, it needs to be engaging. AIs are judged not only by the number of interactions they have (quantity) but also by the length of each interaction (quality). To survive in the marketplace, AIs are going to have to ensure people want to use them, especially in these new digital hyperreal spaces. This is why the emerging field of conversation design is so crucial. These kids aren't looking at digital spaces in utilitarian terms; they're looking at them as places they live in. They want to feel welcome.

fig.18a - Robot sidekicks.

The robot sidekick archetype has been around for decades, and it’s a natural visualization for a bot. Bots are just agents, helping users with a utility. In the hyperreal, they’re not trying to trick someone into dealing with them. They should be proud of the fact that they’re a bot, and they’re here to help.

fig.18b - Droids.

Think C-3PO. He's a protocol droid, and proud of the function he serves, not trying to conceal his lit eyes or the wires in his midriff.

You want a quick pitch? I'll give it to you license plate size: we're talking Augmented Reality User Interfaces for Artificial Intelligence, AR UI for AI.

fig.18c - License plate.

But no matter how they decide to market within the hyperreal, brands in this space will need to be experimental and let the experiments play out.

This is easier said than done, and the basis for the Puppy Paradox in AI: when you get a new puppy, you know it’s gonna whiz on the floor. You accept that and train it. Brands exploring this space for the first time are going to whiz on the floor a bit, but it’s vital to be patient and let them do that so they learn how to not do it.

As we explore and become accustomed to living in the hyperreal, whole new content opportunities are going to become possible. They won't replace the real world; they'll be overlays on the real world. As our brains adapt to seeing the unseen, we will all start becoming tetrachromatic. So the question is: can you see it?